The Evolution of Automatic Speech Recognition (ASR)

The Evolution of Automatic Speech Recognition (ASR)

Contents

- 1. Introduction: Why ASR Matters

- 2. Timeline Overview

- 3. Statistical Era (Before 2010): The GMM-HMM

- 4. Hybrid Era (2010–2015): The DNN-HMM

- Comments & discussions

1. Introduction: Why ASR Matters

Spoken language is the primary interface of human intelligence. For millennia, it was ephemeral—vanishing the moment it was uttered. Automatic Speech Recognition (ASR) changed that fundamental reality, allowing machines to capture, decode, and act upon the human voice.

For decades, ASR was viewed as one of the “AI-complete” problems—challenges that require a system to possess knowledge indistinguishable from a human. Speech is messy. It is riddled with coarticulation (sounds blending together), background noise, accents, disfluencies (“um,” “uh”), and the cocktail party problem.

Yet, today, we take it for granted. We speak to our phones, dictate our messages, and consume auto-generated captions on YouTube. This blog explores the technical odyssey that took us from fragile statistical systems to the robust, massive foundation models of today.

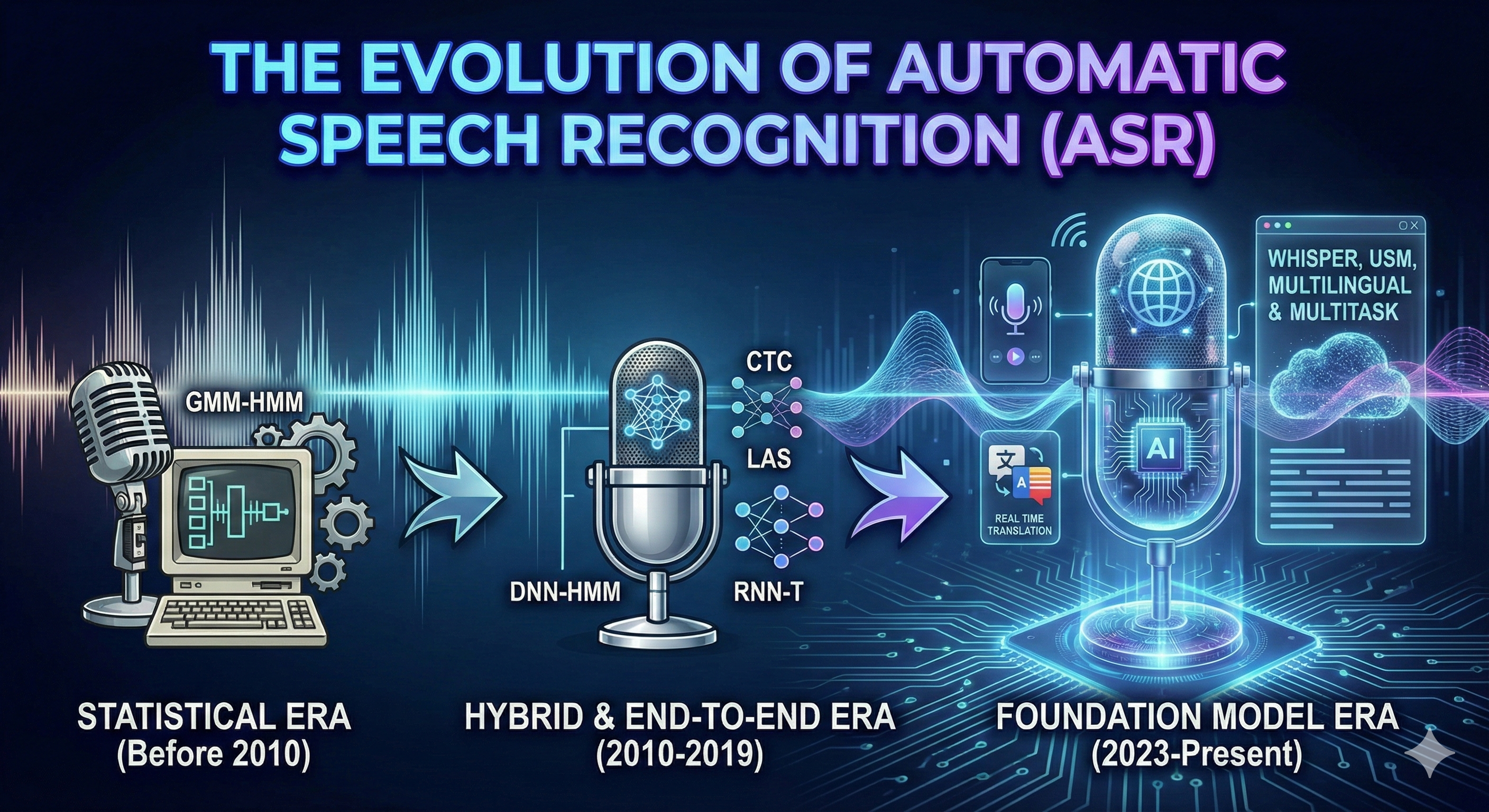

2. Timeline Overview

| Era | Time Period | Representative Models | Key Characteristics |

|---|---|---|---|

| Statistical | 1980s – 2010 | GMM-HMM | Hand-crafted features (MFCC), probabilistic independence assumptions. |

| Hybrid | 2010 – 2015 | DNN-HMM | Neural networks replaced GMMs for probability estimation; HMMs kept for alignment. |

| End-to-End | 2015 – 2019 | CTC, LAS, RNN-T | Single neural network maps audio to text; removed complex alignment pipelines. |

| Self-Supervised | 2020 – 2022 | wav2vec 2.0, HuBERT | Learning from raw, unlabeled audio; reduced dependency on labeled data. |

| Foundation | 2023 – Present | Whisper, USM, Canary | Massive scale (600k+ hours), weak supervision, multitask capabilities. |

Comments & discussions

Thank you for taking the time to read, please chat and give me your comments below ỏ in the comments link .

Comments powered by Disqus.